CMU’s Eberly Center for Teaching Excellence has outstanding resources to support faculty in their education research endeavors. They advocate an approach called Teaching as Research (TAR) that combines real-time teaching with on-the-fly research in education, for example, to evaluate the effectiveness of a new teaching strategy while applying it in a classroom setting.

TAR Workshops

Eberly Center’s interactive TAR workshops help educators identify new teaching and learning strategies to introduce, or existing teaching strategies to evaluate, in their courses; pinpoint potential data sources; determine proper outcome measures; design classroom studies; and navigate ethical concerns and the Institutional Review Board (IRB) approval process. Their approach builds on seven parts, each addressing central questions:

- Identify a teaching or learning strategy that has the potential to impact student outcomes. What pedagogical problem is the said strategy trying to solve?

- What is the research question regarding the effect of the strategy considered on student outcomes? Or what do you want to know about it?

- What teaching intervention is associated with the strategy that will be implemented in the course as part of the study design? How will the intervention incorporate existing or new instructional techniques?

- What sources of data (i.e., direct measures) on student learning, engagement, and attitudes will the instructors leverage to answer the research question?

- What study design will the instructors use to investigate the research question? For example, will collecting data at multiple times (e.g., pre- and post-intervention) or from multiple groups (e.g., treatment and control) help address the research question?

- Which IRB protocols are most suitable for the study? For example, different protocols are available depending on whether the study relies on data sources embedded in normally required course work, whether student consent is required for activities not part of the required course work, and whether any personal information, such as student registrar data, is needed.

- What are the actionable outcomes of the study? How will the results affect future instructional approaches or interventions?

After reviewing relevant research methods, literature, and case studies in small groups to illustrate how the above points can be addressed, each participant identifies a TAR project. The participants have a few months to refine and rethink the project, after which the center folks follow up to come up with a concrete plan in collaboration with the faculty member.

Idea

I teach a graduate-level flipped-classroom course on Foundations of Software Engineering with my colleague Cécile Péraire. We have been thinking about how to better incentivize the students to take assigned videos and other self-study materials more seriously before attending live sessions. We wanted them to be better prepared for live session activities and also to improve their uptake of the theory throughout the course. We had little idea about how effective the self-study videos and reading materials were. One suggestion from the center folks was to use low-stakes assessments with multiple components, which seemed like a good idea (and a lot of work). Cécile and I set out to implement this idea in the next offering, but we also wanted to measure and assess its impact.

Our TAR project

Based on the above idea, our TAR project is summarized below in terms of the seven questions.

- Learning strategy: Multi-part, short low-stakes assessments composed of an online pre-quiz taken by students just before reviewing a self-study component, a matching online post-quiz completed by students right after reviewing the self-study component, and an online in-class quiz on the same topic taken at the beginning of the next live session. The in-class quiz is immediately followed by a plenary session to review and discuss the answers. The assessments are low-stakes in that a student’s actual quiz performance (as measured by quiz scores) does not count towards the final grade, but taking the quizzes is mandatory and each quiz completed counts towards a student’s participation grade.

- Research question: Our research question is also multi-part. Are the self-study materials effective in conveying the targeted information? Do the low-stakes assessments help students retain the information given in self-study materials?

- Intervention: The new intervention here consists of the pre- and post-quizzes. The in-class quiz simply replaces and formalizes an alternate technique, based on online polls and ensuing discussion, that was used in previous offerings.

- Data sources: Low-stakes quiz scores, exam performance on matching topics, and basic demographic and background information collected through a project-team formation survey (already part of the course).

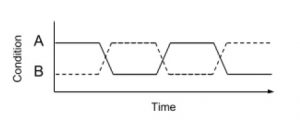

- Study design: We used a repeated-measures, multi-object design that introduces the intervention (pre- and post-quizzes) to a pseudo-randomly determined, rotating subset of students. The students are divided into two groups each week: the intervention group A and the control group B. The groups are switched in alternating weeks. Thus each student ends up receiving the intervention in alternate weeks only, as shown in the accompanying figure. The effectiveness of self-study materials will be evaluated by comparing pre- and post-quiz scores. The effectiveness of the intervention will be evaluated by comparing the performance of the control and intervention groups during in-class quizzes and on related topics of course exams.

- IRB protocols: We used an exempt IRB protocol that applies to low-risk studies in an educational context. This was possible because the study relies on data sources embedded in normally required course work (with the new intervention becoming part of normal course work), because we guarantee anonymity and confidentiality, and because students only need to consent to their data being used in the analysis. To be fully aware of all research compliance issues, we recommend that anyone pursuing this type of inquiry consult with the IRB office at their institution before proceeding.

- Actions: If the self-study materials are revealed to be inadequately effective, we have to look for ways to revise them and make them more effective, for example by shortening them, breaking them into smaller bits, adding examples or exercises, or converting them to traditional lectures. If the post-quizzes do not appear to improve retention of self-study materials, we have to consider withdrawing the intervention and trying alternative incentives and assessment strategies. If we get positive results, we will retain the interventions, keep measuring, and fine-tune the strategy with an eye to further improve student outcomes.
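
The study design above can be illustrated with a small Python sketch. It is only an illustration under stated assumptions, not the tooling used in the study: the function names, the fixed random seed, and the idea of splitting the class once and swapping the halves every week are all hypothetical choices made here for clarity. It shows how a rotating intervention/control schedule could be generated, and how the two comparisons (pre- versus post-quiz, and intervention versus control on the in-class quiz) could be computed as simple mean differences.

```python
import random
from statistics import mean


def assign_groups(students, num_weeks, seed=0):
    """Build a weekly schedule in which two halves of the class swap roles.

    Assumption (not from the study): the class is split once,
    pseudo-randomly, and the halves alternate between intervention
    and control from week to week.
    """
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    shuffled = list(students)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    first, second = set(shuffled[:half]), set(shuffled[half:])

    schedule = {}
    for week in range(1, num_weeks + 1):
        if week % 2 == 1:
            schedule[week] = {"intervention": first, "control": second}
        else:
            schedule[week] = {"intervention": second, "control": first}
    return schedule


def mean_gain(pre_scores, post_scores):
    """Average post-quiz minus pre-quiz score over students who took both."""
    both = pre_scores.keys() & post_scores.keys()
    return mean(post_scores[s] - pre_scores[s] for s in both)


def group_difference(in_class_scores, week_groups):
    """Mean in-class quiz score of the intervention group minus the control group."""
    def group_mean(group):
        return mean(
            in_class_scores[s] for s in week_groups[group] if s in in_class_scores
        )
    return group_mean("intervention") - group_mean("control")
```

A positive `mean_gain` would hint that a self-study component conveys the targeted information, and a positive `group_difference` would hint that the pre- and post-quizzes help retention. Raw mean differences like these are only a first look; a real analysis would need appropriate statistical tests for repeated measures.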

Status

We are in the middle of conducting the TAR study. Our results should be available by early Spring. Stay tuned for a sneak peek.

Acknowledgements

We are grateful to the Eberly Center staff Drs. Chad Hershock and Soniya Gadgil-Sharma for their guidance and help in designing the TAR study. Judy Books suggested the low-stakes assessment strategy. The section explaining the TAR approach is drawn from Eberly Center workshop materials.

Further Information

For further information on the TAR approach, visit the related page by the Center for the Integration of Research, Teaching and Learning (CIRTL). CIRTL is an NSF-funded network for learning and teaching in higher education.