by Hakan Erdogmus*, Soniya Gadgil**, and Cécile Péraire*

*Carnegie Mellon University, Electrical and Computer Engineering

**Carnegie Mellon University, Eberly Center

Background

A previous post talked about a Teaching-As-Research project that we had initiated with CMU’s Eberly Center for Teaching Excellence & Educational Innovation to assess a new teaching intervention.

In our flipped-classroom course on Foundations of Software Engineering, we use instructional materials (videos and readings) that the students must review each week before attending in-class sessions. During the in-class sessions, we normally run a team activity based on the out-of-class material.

To give the students a stronger incentive to review the materials before coming to class, in Fall 2017 we introduced a set of low-stakes, just-in-time online assessments. First, we embedded in each weekly module short online quizzes that the students took before and after covering the module (we called these pre-prep and post-prep quizzes). Second, at the beginning of each live session, we gave the students an extra quiz on the same topics (we called this third component an in-class Q&A). All the quizzes had 5 to 11 multiple-choice or multi-answer questions and were graded automatically.

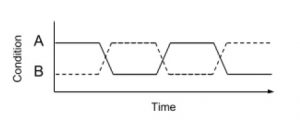

We deployed a total of 13 such quiz triplets (39 quizzes in all), but starting in week 2 the prep quizzes went to alternating groups of students. The students were divided into two sections: one week the first section received the prep quizzes, and the next week the second section received them. All students took the in-class Q&As, after which the correct answers were discussed with active participation from the students. All components were mandatory in the sense that students received points for completing the assigned quizzes. However, the actual scores did not matter: the points counted only toward a student’s participation grade. The quizzes were thus low-stakes.
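The alternating schedule can be sketched as follows. This is a minimal illustration, assuming that the first section received the prep quizzes in week 2 and that the two sections strictly alternated through week 13; the names `prep_section` and `quizzes_for` are hypothetical. Week 1 is deliberately left out because the post does not spell out how it was handled.

```python
FIRST_PREP_WEEK = 2  # prep quizzes alternate between sections starting in week 2
LAST_WEEK = 13       # one module (and one quiz triplet) per week


def prep_section(week: int) -> int:
    """Section (1 or 2) that receives the pre-/post-prep quizzes for `week`.

    Assumption: section 1 goes first in week 2, then the sections alternate.
    Week 1 is not modeled because the post only describes weeks 2 onward.
    """
    if not FIRST_PREP_WEEK <= week <= LAST_WEEK:
        raise ValueError(f"week {week} is outside the alternating schedule")
    return 1 if (week - FIRST_PREP_WEEK) % 2 == 0 else 2


def quizzes_for(section: int, week: int) -> list[str]:
    """Quizzes a student in `section` takes for the module of `week`."""
    quizzes = ["pre-prep", "post-prep"] if prep_section(week) == section else []
    quizzes.append("in-class Q&A")  # every student takes the in-class Q&A
    return quizzes


if __name__ == "__main__":
    for week in range(FIRST_PREP_WEEK, LAST_WEEK + 1):
        print(week, quizzes_for(1, week), quizzes_for(2, week))
```

Under these assumptions each section acts as the Treatment Group for six modules and as the Control Group for the other six.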

Research Questions

Our main research question was:

RQ1: How does receiving an embedded assessment before and after reviewing instructional material impact students’ learning of concepts targeted in the material?

To answer the research question, we tested two hypotheses:

H1.1: Students who receive the prep quizzes before and after reviewing instructional materials will score higher on the subsequent in-class Q&A on the same topic.

H1.2: On the final exam, students will perform better on questions based on topics for which they had received prep quizzes, compared to questions based on topics for which they had not received prep quizzes.

As a secondary research question, we wanted to gauge the overall effectiveness of the instructional materials, which led to a third hypothesis:

RQ2: Do the instructional materials improve the students’ learning?

H2: Students’ post-prep quiz scores on average will be higher than their pre-prep quiz scores.

Results

Pre to Post Gain

First, we answer RQ2. Prep quizzes were embedded in twelve of the thirteen modules. Overall, students who took the prep quizzes improved significantly from the pre-prep to the post-prep quiz. The average scores and standard deviations were as follows:

| Quiz timing | Average Score (Standard Deviation) |
|---|---|
| Pre-prep quiz | 53% (21%) |
| Post-prep quiz | 71% (18%) |

We had a total of 559 observations in this sample. The average relative gain of 34% (from 53% to 71%) was statistically significant with a p-value of .002 according to the paired t-test (dof = 559, t-statistic = 10.39). This result suggests that the students overall had some familiarity with the topics covered, but that the instructional materials also contained new information that increased the scores.
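The 34% figure is a relative gain, (71 - 53) / 53 ≈ 0.34, and a paired t-test works on each student’s pre/post difference for a module: t is the mean difference divided by its standard error, with n - 1 degrees of freedom for n pairs. The sketch below shows both computations in plain Python; the helper names and the sample scores are hypothetical, not the course data.

```python
import math
import statistics


def relative_gain(pre: float, post: float) -> float:
    """Gain from pre to post, expressed relative to the pre score."""
    return (post - pre) / pre


def paired_t(pre: list[float], post: list[float]) -> tuple[float, int]:
    """Paired t-statistic and degrees of freedom for matched pre/post scores."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need at least two matched pairs")
    diffs = [b - a for a, b in zip(pre, post)]  # one difference per student/module pair
    n = len(diffs)
    se = statistics.stdev(diffs) / math.sqrt(n)  # standard error of the mean difference
    return statistics.mean(diffs) / se, n - 1


if __name__ == "__main__":
    print(f"{relative_gain(53, 71):.0%}")  # reported averages; prints 34%
    t, dof = paired_t([40, 50, 60], [60, 60, 90])  # hypothetical scores
    print(round(t, 2), dof)
```

In practice a statistics library would also supply the p-value; the pure-Python version only makes the arithmetic behind the test statistic explicit.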

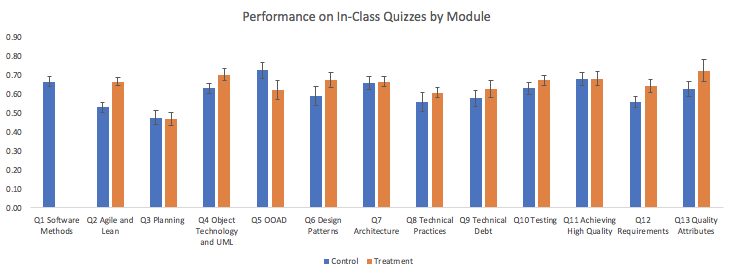

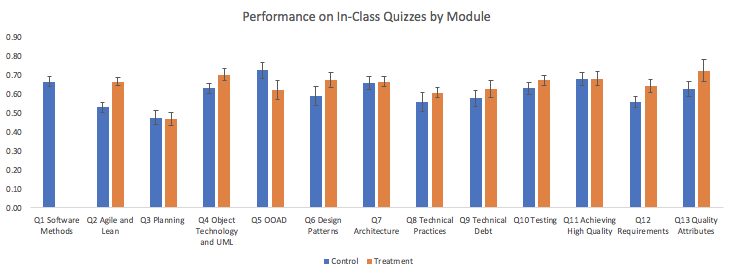

Performance on In-Class Q&As

We compared the average in-class Q&A scores of students who completed both the pre-prep and post-prep quizzes (Treatment Group) to those of students who did not receive the prep quizzes (Control Group). The average scores of the two groups were as follows:

| Group (Sample Size) | In-Class Q&A Score |
|---|---|
| Treatment: with prep quizzes (258) | 64.6% |
| Control: without prep quizzes (349) | 61.2% |

The prep quizzes affected the students’ learning as measured by their in-class Q&A scores: the improvement from 61.2% to 64.6% was statistically significant according to the independent-samples t-test (p-value = 0.02, dof = 605, t-statistic = 2.36). However, the effect size, as measured by Cohen’s d = 0.19, was small. Module-by-module improvements were as follows:
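The reported numbers can be cross-checked. For a pooled-variance independent-samples t-test, dof = n1 + n2 - 2 and Cohen’s d = t · sqrt(1/n1 + 1/n2). Applied to t = 2.36 with 258 and 349 observations, this gives dof = 605 and d ≈ 0.19, matching the values reported above. The sketch assumes the equal-variance form of the test, which the post does not state explicitly; `cohens_d` and `cohens_d_from_t` are hypothetical helper names.

```python
import math
import statistics


def cohens_d(a: list[float], b: list[float]) -> float:
    """Cohen's d for two independent samples, using the pooled standard deviation."""
    n1, n2 = len(a), len(b)
    pooled_var = ((n1 - 1) * statistics.variance(a)
                  + (n2 - 1) * statistics.variance(b)) / (n1 + n2 - 2)
    return (statistics.mean(a) - statistics.mean(b)) / math.sqrt(pooled_var)


def cohens_d_from_t(t: float, n1: int, n2: int) -> float:
    """d implied by a pooled-variance t-statistic, since t = d / sqrt(1/n1 + 1/n2)."""
    return t * math.sqrt(1 / n1 + 1 / n2)


if __name__ == "__main__":
    n_treatment, n_control = 258, 349
    print("dof =", n_treatment + n_control - 2)  # 605
    print("d =", round(cohens_d_from_t(2.36, n_treatment, n_control), 2))  # 0.19
```

The relation also shows why a significant result can still have a small effect: with several hundred observations, even a modest d yields a t-statistic above the significance threshold.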

Notably, for the quiz Q5 on Object-Oriented Analysis & Design, the control group counterintuitively performed significantly better. We will have to investigate this outlier in the next round.

Final Exam Performance

For the final exam, each exam question was mapped to the topic it assessed. Each student’s score on a question was tagged according to whether the student was in the Control Group or the Treatment Group for that topic, based on the associated prep quizzes. Average scores over control topics and over treatment topics were first computed for each student, and then class-wide averages of these per-student scores were computed for control topics and for treatment topics. The results for the two groups were as follows:
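One reading of this two-step procedure (per-student averages first, then class-wide averages) is sketched below. The record layout, the `class_averages` name, and the sample data are hypothetical. Because each student contributes a single average per group, students who happened to answer more questions in one group do not dominate the class-wide figure.

```python
from collections import defaultdict
from statistics import mean


def class_averages(scores, group_of):
    """Class-wide averages of per-student averages, split by group.

    scores:   iterable of (student, topic, score) tuples, one per exam question.
    group_of: dict mapping (student, topic) -> "treatment" or "control",
              derived from which prep quizzes the student received.
    """
    per_student = defaultdict(list)  # (student, group) -> that student's question scores
    for student, topic, score in scores:
        per_student[(student, group_of[(student, topic)])].append(score)

    # Step 1: each student's average over treatment topics and over control topics.
    student_means = {key: mean(values) for key, values in per_student.items()}

    # Step 2: average the per-student means across the whole class, per group.
    result = {}
    for group in ("treatment", "control"):
        means = [m for (_, g), m in student_means.items() if g == group]
        if means:
            result[group] = mean(means)
    return result


if __name__ == "__main__":
    scores = [("s1", "A", 80), ("s1", "A", 60), ("s1", "B", 50),
              ("s2", "A", 90), ("s2", "B", 70), ("s2", "B", 30)]
    group_of = {("s1", "A"): "treatment", ("s1", "B"): "control",
                ("s2", "A"): "control", ("s2", "B"): "treatment"}
    print(class_averages(scores, group_of))
```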

| Group | Average Score on Final Exam (Standard Deviation) |
|---|---|
| Treatment: with prep quizzes | 58% (17%) |
| Control: without prep quizzes | 60% (15%) |

The difference was not significant according to the independent t-test (dof = 51, t-statistic = .64, p-value = .525). Thus, the prep quizzes embedded in the instructional material had no discernible effect on final exam performance.

Expectations and Surprises

We were a bit surprised that the post-prep scores had a low average of 71% and that the improvement was only 34%. We would have expected an average in the range of 75-85%, corresponding to a relative improvement of roughly 40-60% over the pre-prep quiz.

The impact of the prep quizzes on in-class Q&A scores was also below expectations, even though, for each topic, both types of assessment had similar questions. One reason might have been the lack of feedback on correct answers in the prep quizzes. After the post-prep quiz, the students could see which answers they got wrong, but the correct answers were not revealed, since they would be discussed during the live session after the corresponding in-class Q&A.

The lack of impact on final exam scores was not too surprising, though. The prep quizzes and in-class Q&As mostly had questions at low cognitive levels (knowledge and comprehension in Bloom’s taxonomy), while the final exam had questions at higher cognitive levels (application, analysis, and synthesis). Also, the final exam was separated significantly in time from the prep quizzes (from 3 to 13 weeks, depending on the topic), and the resulting knowledge loss may have forced all students to re-review the instructional materials to prepare for the final exam, irrespective of whether they had taken the prep quizzes.

What Next?

We have been collecting more data in the subsequent offering of the course, with minor modifications that address some of the possible drawbacks of the first round and that should yield further information on certain observed effects.

First, we have added better feedback mechanisms to the post-prep quizzes so that, after completing a quiz, the students can see not only which answers they got wrong but also the correct answers. We will see whether the new feedback affects their in-class Q&A performance.

Second, we will add to the final exam some low-cognitive-level questions resembling those of the prep quizzes and in-class Q&As. We hope that this change will reveal whether time separation or question complexity is the more important factor in erasing the effect of just-in-time, low-stakes assessment.

Finally, we will have to dig deeper to see why the instructional materials were not as effective as we had hoped, as measured by the post-prep quiz scores. Were the quizzes not enough of an incentive for the students to review the instructional materials? Were the questions misaligned with the instructional materials? Or were the instructional materials not well designed to achieve their learning objectives in the first place? We will try to correlate prep quiz assignments with actual statistics on video viewing and reading material downloads.

Stay tuned!

I was asked to share some of the pedagogical innovations from two books I recommended once during a talk: Pedagogical Patterns and Training from the Back of the Room. In this post I will focus on Pedagogical Patterns, and leave Training from the Back of the Room for my next post. I will provide an overview of the book and share the insights that I have put in practice. I hope you will be tempted to read them and apply some of these ideas.
